How Cloudflare Won With Critical Crisis Comms Choices

Earlier this week, large chunks of the internet were down, not down to AWS, but, this time it was Cloudflare who experienced a major service outage and impacted businesses (I’m sure some of you were impacted unfortunately 😔) and institutions that route traffic through its network.

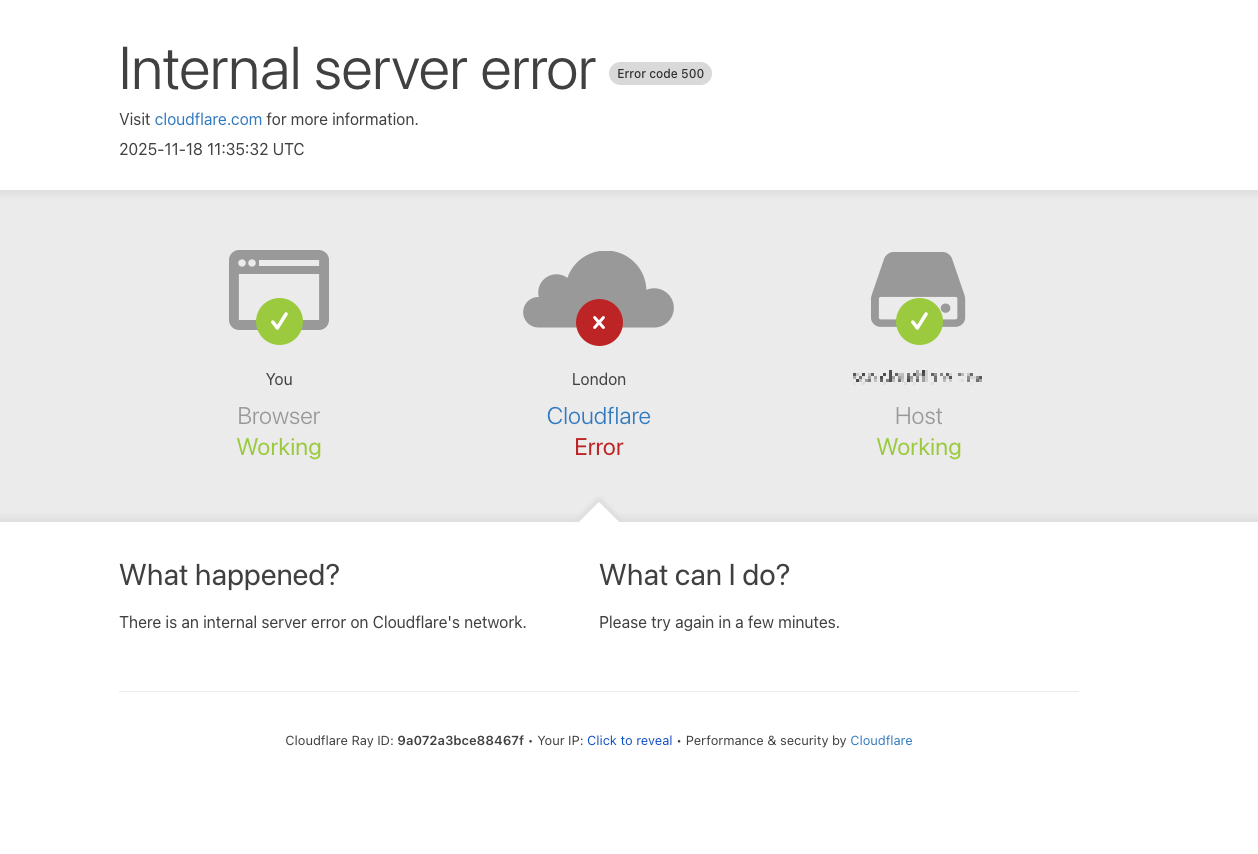

Large chunks of the web saw this error message throughout Tuesday

Cloudflare communications throughout the crisis showed how important the written word still is, actually admitting a misdiagnosis of a DDos attack but then clarifying impressively through a detailed blog post and series of tweets.

Crisis comms is hard, my former teams and I had to navigate a number of difficult situations (unfortunately including loss of life, terror attacks, cyber attacks etc) and you rarely get it right, and never get it right for everyone, but in this instance Cloudflare almost did (albeit with muscle memory from their previous incidents) and as Marketing and Business execs we should learn from this.

In modern day crisis comms companies are far too quick to send out social updates or in a rush to make the CEO to create a quick but polished selfie videos (often selecting over communicating vs focused communications) and then disappear once the issue is fixed with no follow up.

I remember the last big issue I was asked to advise on and it already had over 200 comments and 300 corrections on the shared document, with no clear goal and a botched social apology video, it was quickly addressed with a quick timeline, a simple table and a honestly written email apologising.

In Cloudflare’s case they showed why their apology and explainer needed to be a blog post - proving why the written word is still the hardest but most precise form of communication.

The Cloudflare Blog Post & Problems Broken Down:

Trigger Event: A change in database permissions caused the Bot Management system’s feature file to double in size, exceeding pre-set system limits and causing critical software failures throughout the network.

Impact: Core CDN, security services, login/authentication, Workers KV, Access, and sections of Cloudflare’s dashboard suffered major disruptions, with widespread HTTP 5xx errors negatively affecting customer sites and users globally.

Diagnosis Challenges: The presence of intermittent recovery and failure cycles, caused by fluctuating “good” and “bad” configuration files, made it difficult to immediately diagnose and resolve the issue.

Initial Misattribution: The outage coincided with the Cloudflare status page going down (hosted externally), leading to initial suspicions of a large-scale DDoS attack before the real root cause was pinpointed.

Resolution Steps: Cloudflare mitigated the outage by halting the propagation of the malformed file, manually restoring a known good configuration, restarting services, and committing to harden future configuration file ingestion and error recovery mechanisms.

The Breakdown

Power Of The Written Word In Crisis Comms

Precision over performance - The post has to explain exactly what went wrong ClickHouse permissions, feature files, duplicate rows, FL vs FL2, memory limits, and timelines down to the minute. That level of precision and technical specificity is almost impossible to hold clearly in a video without either oversimplifying or losing the audience in jargon. They had to explain to multi billion dollar companies and down to bloggers why their site was down and why they couldn’t transact

Asynchronous, re‑readable accountability - Customers, engineers, journalists and regulators can all read, re‑read, quote and challenge specific sentences. You can’t create a video in the same granular way or easily cite an exact line in a Slack or incident review. This blog post becomes a durable, inspectable artefact of accountability

Layered audience needs in one artefact - This single article speaks simultaneously to:

Non‑technical customers - “we’re sorry, we know this is unacceptable”

Technical buyers - detailed root cause and architecture

Internal teams - clear timeline, next steps

Importantly the written format lets each persona skim for what they need. In a video for instance, you’re trapped in one linear narrative or have to show with words, pictures and graphics .

Tone control and nuance - The apology is carefully worded: acknowledging pain, avoiding any defensiveness, explaining their own missteps (“we wrongly suspected a DDoS at first”), and showing learning without sounding panicked. Their written language gives them time to calibrate that tone word‑by‑word

Structure that mirrors incident thinking - Sections like “The outage”, “How Cloudflare processes requests, and how this went wrong today”, “The query behaviour change”, “Remediation and follow‑up steps” follow the mental model of an incident post‑mortem. Writing lets you explicitly signpost that structure so readers can jump straight to impact, root cause or remediation

Why This Had To Be A Blog Post

(Not just a tweet, a link to a status page, or sharing screenshots of an internal doc)

It’s both public and canonical: A blog post is public like a press release but technical like an internal RCA (root cause analysis). It becomes the canonical explanation customers, media and the industry will reference, something you don’t get from a scattered thread or after event email. Listed companies have to serve their shareholder first, then their customers and finally their team. This approach they serve all three in one detailed approach.

It’s long‑form by design: The story requires:

Real context - Cloudflare’s role in the Internet and how seriously they take their role

The impact across multiple products and services

Deep technical root cause

A precise timeline - often helping others to learn and showing control over the situation

Offering concrete remediation bullet points

Only a long‑form written format comfortably holds that depth without feeling bloated

It’s searchable and quotable: Engineers, SREs and journalists will Google this incident for years or the next time something happens. They need a URL, headings, and strong copy they can quote directly in docs, tickets and articles. A blog post is built for that, videos, status update pages, tweets and other social updates cannot offer this.

Why Video Would Struggle To Carry The Nuance & The Sentiment Needed

Cognitive load vs detail - Reading lets you slow down on dense parts (e.g. ClickHouse schemas, feature file duplication, memory pre‑allocation) and skim what you don’t need. In video, this level of technical depth becomes overwhelming, or you dilute it so far that it stops being a credible source

Granular trust signals - Trust in a crisis comes from specificity: timestamps, specific error codes, internal mitigation steps, exact paths, while even snippets of the panic message. Those are much easier to trust when you can see them written, copy them, and map them onto your own systems. When something of this magnitude happens internal IT and engineers are held responsible, they have to be able to explain in simple terms.

Non‑linear consumption - Different stakeholders need different parts right now:

Execs: apology, high‑level cause, reassurance, actions taken

Engineers: architecture, query change, limits, code

Ops: incident timeline, mitigation sequence

Written content allows non‑linear navigation; video forces everyone through a linear story arc or creating and editing long form content.

Risk of over‑indexing on performance - Video pushes you towards performance (charisma, body language, optics). In a technical, high‑impact outage, those signals can backfire – “are they performing or being transparent?” - “What did they mean by this gesture?”

The stripped‑back nature of a blog post emphasises facts, not theatrics.Update and longevity - If they discover a nuance later or need to correct a detail, editing and annotating the blog post is trivial and transparent. Updating a video is slower, more opaque, and fragments the canonical story.

Here’s a breakdown of how they created this post modem (via Reddit)

The Crisis‑Comms Lesson You Can Learn From & Use With Your Role

Cloudflare’s outage write‑up is a reminder that in serious technical crises, the most trusted medium is still a carefully structured, long‑form written post‑mortem: one artefact that combines apology, technical depth and a clear action plan in a format people can search, quote and revisit long after the incident has passed.

In the future, you have the option to use this framing as a template, you can move beyond war rooms and crisis meetings and use this structure for you.

🗣️ If someone would benefit from reading this crisis comms breakdown, please copy and paste marketingunfiltered.co/p/why-cloudflare-won-with-critical into your work chat app (Teams, Slack) or industry group (WhatsApp, iMessage or LinkedIn)

This was my latest newsletter post on Marketing Unfiltered, my weekly newsletter for Marketing, Growth & Business Leaders.