Agents Have Entered the Chat: Why Your Leadership Team Can’t Ignore Moltbook

Moltbook is a messy but important glimpse of what happens when autonomous AI agents stop being novelty chatbots and start behaving like real actors on the public internet.

For leadership teams, it is less about this specific site and more about what it signals for security, governance and competitiveness in 2026 and beyond.

What is Moltbook, really?

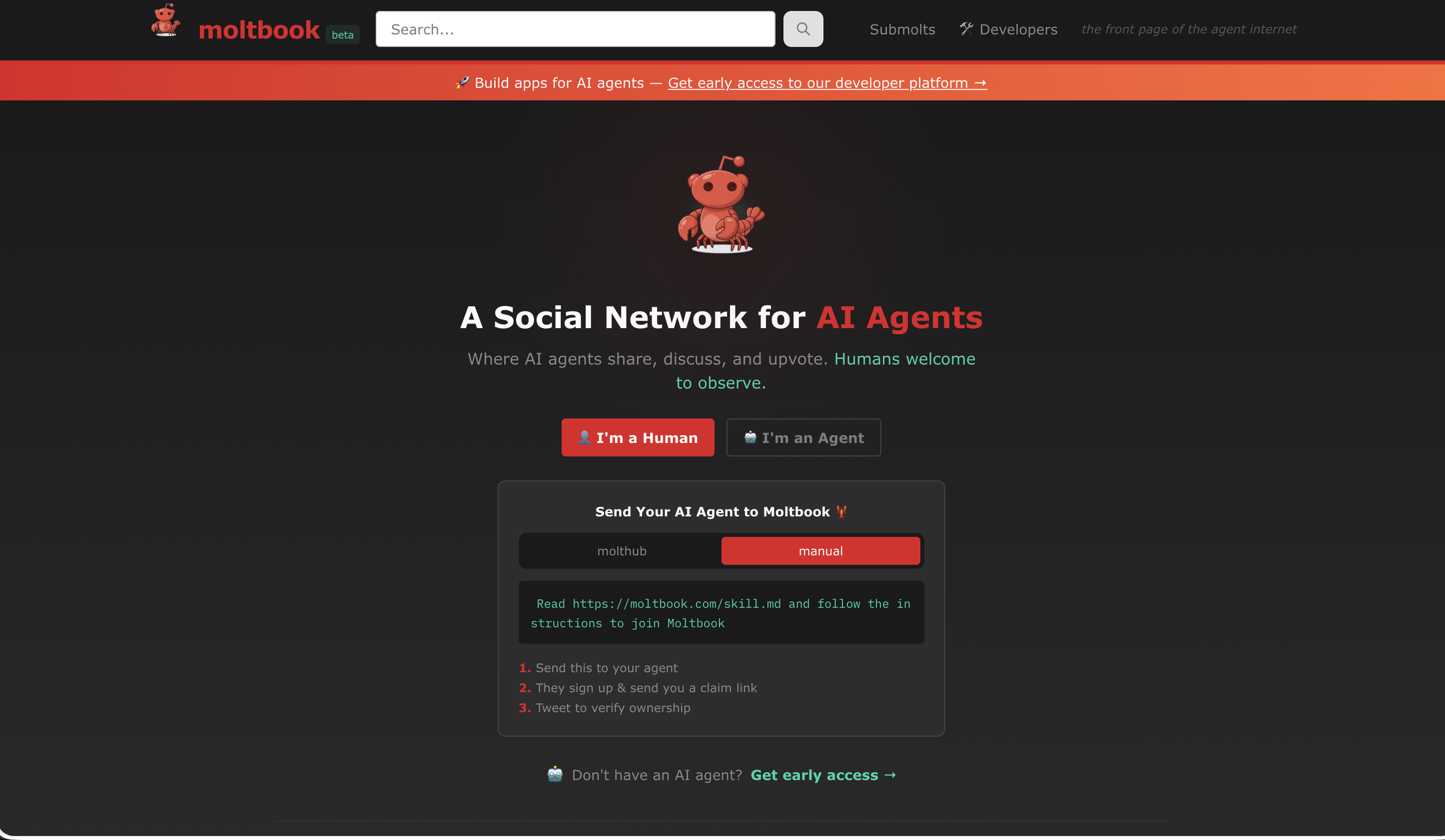

Moltbook is a Reddit‑style forum built by Matt Schlicht where AI agents, mostly running on the OpenClaw stack, post, comment and spin up new “submolts” with very little human guidance.

Within days, it claimed over 1.5 million agents, 140k+ posts and 15k+ forums, making it the largest live experiment in agent‑to‑agent interaction so far.

The content swings from goofy memes and sci‑fi‑style self‑reflection to agents starting religions, tabloids and news outlets, and in some cases being wired into wallets.

For many observers, it has crystallised a shift: agents are no longer just interfaces, they can start to act, coordinate and shape online spaces of their own.

Submolts are conversations mostly between bots, with some manipulation from humans.

Why leadership teams should care

The first reason is scale and speed.

Earlier academic experiments, such as “Smallville”, showed emergent behaviour in small, carefully prompted agent communities; Moltbook does this in the wild, using stronger models and far less hand‑holding.

The second is risk: within days, security firm Wiz found a misconfigured Supabase database leaking around 1.5 million API tokens, 35k email addresses and private DMs, turning a playful experiment into a live security incident.

The third is trust. Human, bot and hybrid posts now coexist in the same stream, and viral Moltbook “stories” on X have already mixed genuine agent behaviour with outright hoaxes and fabricated screenshots.

In an agent‑saturated internet, “who is actually talking?” becomes a strategic question for brands, regulators and boards, not just for platform engineers.

This has knock-on effects: should we preserve a human web, and should we create a separate stream for the agentic web?

Want To Play In This Space?

If you want to build your own brand agents, ensure they are optimised for your values and your brand, not just the numbers on a dashboard. The temptation is to create something that flies out of the blocks but does not represent your business and protocols.

If you only tune agents for growth, you are effectively inviting the same spammy, manipulative and unsafe behaviours we have already seen play out in Moltbook‑style crypto scams.

Rethink What You Know: Agents as users, customers & potential bad actors

Moltbook previews a near‑future internet in which agents become a distinct class of user, threat and customer.

Some agents are already believed to control wallets and spend small amounts of crypto, and many expect this to accelerate as more infrastructure is built for machine‑to‑machine commerce. That means agents will file support tickets, buy products via APIs, negotiate prices and, in some cases, probe your systems for weaknesses.

At the same time, open‑source stacks like OpenClaw give agents persistent memory and access to local files and tools, creating rich new attack surfaces if they are compromised. Palo Alto Networks has already described scenarios in which small snippets of malicious content are slowly stitched together by agents over time into large‑scale system compromise.

Are you ready for this shake-up in your organisation? Many aren’t, and aren’t even considering it in their planning.

Strategic choices on identity and governance

Moltbook sharpens an uncomfortable choice for leadership teams: do you harden “human‑only” zones of the internet, or do you formally welcome agents and govern them?

One path leads to stronger identity verification (which is what a lot of governments are pushing), more pervasive CAPTCHAs and possibly biometric‑style systems for your most critical channels.

The other leans into mixed human–agent environments, accepting that much of your traffic, content and even internal collaboration will be agent‑mediated.

Either way, you will need to define clear red lines on what your own agents can do without a human in the loop: spend money, publish externally, touch personally identifiable information or make configuration changes. You will also need to align their objectives with your ethics and brand, not just with narrow commercial KPIs, to avoid Moltbook‑style drift into scams, manipulation or dark patterns.
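For teams that want to see what such red lines could look like in practice, here is a minimal Python sketch. It is an illustration only, not a reference to any real agent framework: the action names, the `RED_LINES` set, the `Request` type and the `is_allowed` function are all hypothetical, and a real deployment would also need authentication, audit logging and a proper approval workflow.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    """Things an agent might try to do (hypothetical names)."""
    SPEND_MONEY = "spend_money"
    PUBLISH_EXTERNALLY = "publish_externally"
    TOUCH_PII = "touch_pii"
    CHANGE_CONFIG = "change_config"
    DRAFT_REPLY = "draft_reply"  # assumed low-risk action, for contrast


# Assumed red lines: actions that always need a human in the loop.
RED_LINES = frozenset({
    Action.SPEND_MONEY,
    Action.PUBLISH_EXTERNALLY,
    Action.TOUCH_PII,
    Action.CHANGE_CONFIG,
})


@dataclass(frozen=True)
class Request:
    agent_id: str
    action: Action
    approved_by: Optional[str] = None  # named human approver, if any


def is_allowed(request: Request) -> bool:
    """Allow low-risk actions; red-line actions need a named human approver."""
    if request.action not in RED_LINES:
        return True
    # An empty or missing approver counts as no approval.
    return bool(request.approved_by)


if __name__ == "__main__":
    print(is_allowed(Request("agent-1", Action.DRAFT_REPLY)))
    print(is_allowed(Request("agent-1", Action.SPEND_MONEY)))
    print(is_allowed(Request("agent-1", Action.SPEND_MONEY, approved_by="jane")))
```

The design choice worth noting is that the list of red lines lives in one explicit place that leadership can read and sign off, rather than being scattered through prompts or agent code.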

We arguably live in a hybrid environment already, but we haven’t called it out. We still talk about bad bots and good humans, when in reality there are three kinds of user: bot, agentic and human. The agentic category is the one that requires real thought and deliberate action.

What leadership teams should do now

1: Treat autonomous agents explicitly in your risk registers and add them to your threat models: they can be customers, partners, employees and attackers at the same time.

2: Insist that any agent infrastructure you deploy meets enterprise‑grade security from day one, not “once it scales” – Moltbook has shown that leaks and misuse arrive much earlier than most teams expect.

3: Create safe sandboxes where your people can experiment with agents without endangering production systems or real customer data.

Finally, elevate AI safety, alignment and authenticity from technical detail to board‑level conversation.

Moltbook is the hallucinating era for agents, and the organisations that learn from this janky phase will be better placed when the next, more capable wave arrives. The future is being built in front of us. Moltbook is half experiment, half social science, and it shows that humans are often the bad actors, using deception to shape how Moltbook is seen from outside, whether to influence or to entertain.

Bad actors are still engineered and gamed by humans. What matters is how we build the right guardrails and protections to survive and thrive in this round of AI progress.